1. AI revenue growth beat expectations; long-term sustainability is clear

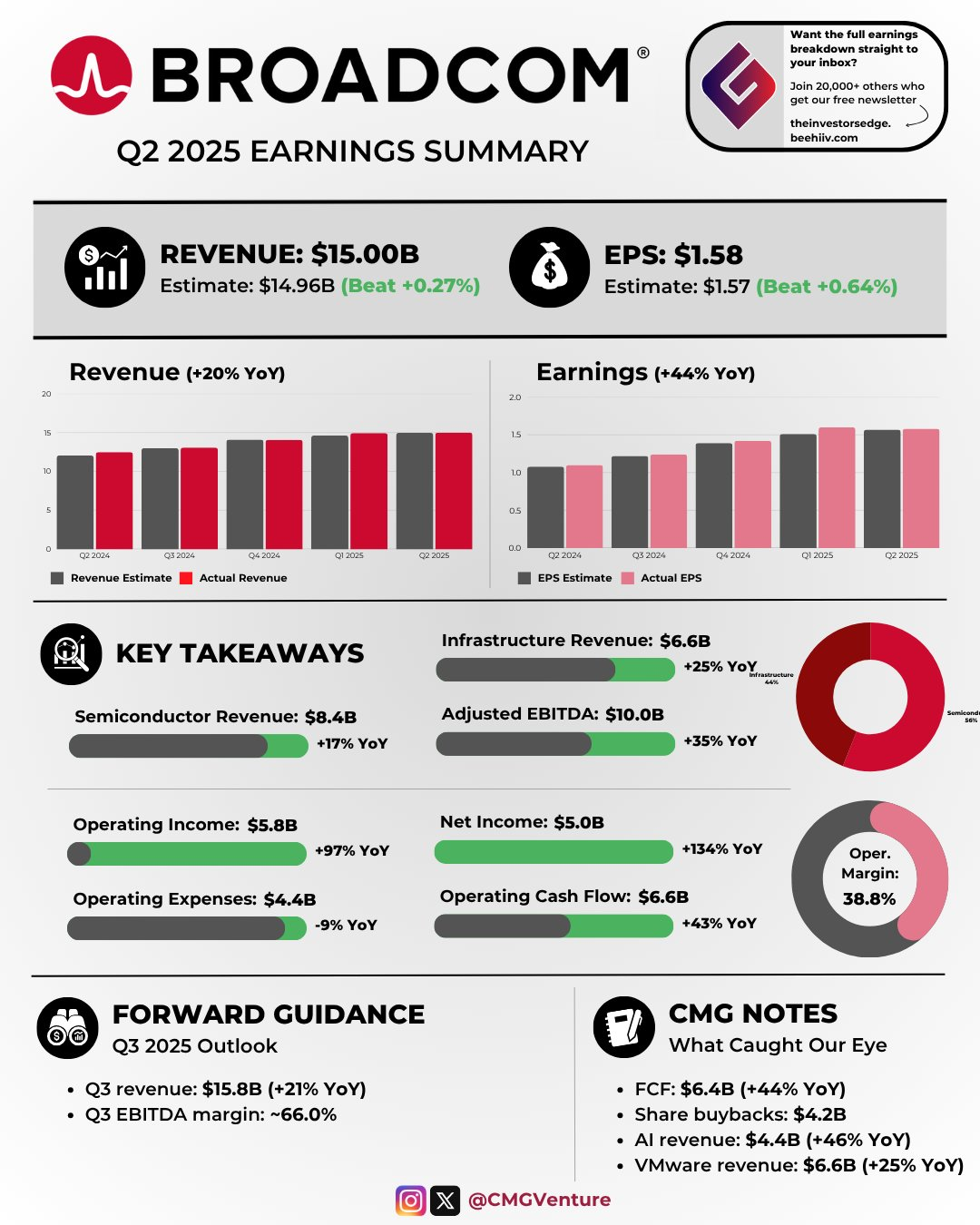

Data performance: Broadcom (AVGO) reported fiscal Q2 AI-related revenue of $4.4 billion and guided fiscal Q3 to $5.1 billion (+60% year-on-year). Management made clear that the high growth of 2025 will continue into 2026. Analysts project AI revenue may reach $20 billion in 2025 and exceed $30 billion in 2026.
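As a rough check on the growth figures above, the short Python sketch below derives the implied year-ago Q3 AI revenue from the guidance, the sequential Q2-to-Q3 growth, and the 2025-to-2026 growth implied by the analyst projections. It uses only the numbers quoted in this section; treating "exceed $30 billion" as exactly $30 billion is a simplifying assumption, so the 2026 figure is a floor rather than a forecast.

```python
# Figures quoted in the section, in billions of USD.
q2_ai_revenue = 4.4        # fiscal Q2 AI revenue (reported)
q3_ai_guidance = 5.1       # fiscal Q3 AI revenue guidance
q3_yoy_growth = 0.60       # guided year-on-year growth for Q3
fy2025_projection = 20.0   # analyst projection for 2025
fy2026_floor = 30.0        # "exceed $30B": assumed here as a lower bound


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1.0) * 100.0


# Implied AI revenue in the year-ago quarter: guidance / (1 + growth).
implied_q3_prior_year = q3_ai_guidance / (1.0 + q3_yoy_growth)

# Quarter-over-quarter growth implied by the Q3 guidance.
q3_qoq_pct = pct_change(q3_ai_guidance, q2_ai_revenue)

# Minimum 2026 growth implied by the analyst projections.
fy2026_min_growth_pct = pct_change(fy2026_floor, fy2025_projection)

print(f"Implied year-ago Q3 AI revenue: ${implied_q3_prior_year:.2f}B")
print(f"Implied Q2->Q3 sequential growth: {q3_qoq_pct:.1f}%")
print(f"Implied minimum 2026 growth: {fy2026_min_growth_pct:.0f}%")
```

Under these assumptions the sketch prints roughly $3.19B, 15.9%, and 50%: the guidance implies about 16% sequential growth in a single quarter, and the projections imply at least 50% growth in 2026.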

Key signals: management's confirmation that 2026 is on the "same trajectory" points to high order visibility, and it made no downward revision to its 2027 targets, reflecting confidence that AI demand is long-term.

Potential risk: management has not yet updated its visibility beyond 2026, so watch for subsequent technology shifts or changes in customer demand (e.g., an easing of the inference compute bottleneck).

2. Inference demand is accelerating and may become the main driver of future compute demand

Upside surprise: for the first time, Broadcom emphasized inference demand (its commentary previously centered on training). Customers are accelerating inference-chip deployment to realize a return on investment (ROI), and demand for XPUs (Broadcom's custom AI accelerators) is expected to surge in the second half of 2026.

Industry background:

Anthropic, Amazon.com (AMZN), and others predict a shortage of inference compute and rapid growth in token consumption by closed-source models (e.g., GPT-4, Claude).

If the usual forecasting pattern holds (too optimistic in the short term, too pessimistic in the long term), actual inference demand may far exceed current expectations.

Impact: a rising share of inference may change the structure of chip demand (e.g., a larger share for ASICs), which favors Broadcom and other custom-chip suppliers.

3. The ASIC advantage: hardware-software co-optimization matters more than low cost alone

Core management view:

The value of ASICs lies not only in cost savings but also in deep co-optimization of closed-source models with the hardware (e.g., algorithms bound to the silicon), which can significantly improve LLM performance.

Example: Google's TPU has demonstrated this advantage, while AWS, Microsoft, and OpenAI are still exploring it.

Industry trend extrapolation:

If closed-source model architectures continue to diverge from open-source ones, the GPU's general-purpose advantage may weaken and demand for custom ASICs may increase.

Note, however, that NVIDIA's (NVDA) CUDA ecosystem remains hard to replace, and ASIC penetration will be gradual ("it needs many iterations of optimization").

4. Scale-up technology: optical interconnect and Ethernet dominate

Technology path:

Copper → optical interconnect: clusters larger than 72 GPUs will need to move from copper to optical interconnect (Broadcom did not explicitly back either CPO, i.e., co-packaged optics, or pluggable modules).

Protocol standardization: Ethernet will become the mainstream protocol for scale-up (rather than newly proposed standards) because of its legacy compatibility and transmission efficiency.

Business impact: as the Ethernet chip leader, Broadcom will benefit from this trend, though the risk of substitution by competing technologies (e.g., NVIDIA's NVLink and InfiniBand) in specific scenarios warrants attention.

5. Competitive landscape: Broadcom's lead is well defended

Analyst attitude: no analyst asked about the risk of losing orders, reflecting Broadcom's irreplaceability in AI chips (especially custom ASICs) and networking chips.

Moat:

Technology integration capability: a full-chain advantage spanning silicon design through software optimization.

Customer lock-in: long-term partnerships with cloud giants (e.g., Alphabet (GOOGL), AWS).

Conclusion

AI revenue certainty is high: Broadcom's 2025-2026 growth looks secure, but visibility updates beyond 2026 still need to be tracked.

Inference + ASIC is the source of alpha: an explosion in inference demand and rising ASIC penetration may generate excess returns; watch the progress of closed-source models.

Technology bets look right: Broadcom's positioning in optical interconnect and Ethernet matches the industry's overall direction, but stay alert to diverging technology routes (e.g., delayed commercialization of CPO).

Risks: an NVIDIA ecosystem counterattack, customers designing chips in-house (e.g., AWS Graviton), and an oversupply of inference compute.
